Why in News?

Recently, in two significant rulings, US courts sided with tech companies developing generative AI, addressing for the first time whether training AI models on copyrighted content constitutes “theft.”

Generative AI tools like ChatGPT and Gemini rely on massive datasets—including books, articles, and internet content—for training. While various lawsuits have been filed accusing tech firms of copyright infringement, the companies argue their use of content is “transformative” and qualifies as “fair use.”

Though the two court decisions arrived via different legal paths, both support the tech companies’ stance, potentially setting an important precedent for future cases.

These rulings highlight the growing legal acceptance of using copyrighted material in AI training under fair use—provided the output serves a transformative, public-interest purpose.

What’s in Today’s Article?

- Case 1: Writers vs Anthropic – Court Rules in Favour of AI Developer

- Case 2: Writers vs Meta – Court Sides with Meta, But Flags Compensation Concerns

- Ongoing and Escalating Legal Battles

- Significance of the Rulings: A Win, But Not the Final Word

Case 1: Writers vs Anthropic – Court Rules in Favour of AI Developer

- In August 2024, writers Andrea Bartz, Charles Graeber, and Kirk Wallace Johnson filed a class action lawsuit against Anthropic, creator of the Claude LLMs.

- They alleged that Anthropic used pirated versions of their books without compensation, harming their livelihoods by enabling free or cheap content generation.

- Court's Decision: Fair Use Applies

- A judge of the US District Court for the Northern District of California ruled in favour of Anthropic, holding that the use of the books to train its AI was “fair use.”

- The doctrine of fair use in copyright law allows limited use of copyrighted material without permission from the copyright holder.

- It allows use for purposes such as criticism, comment, news reporting, teaching, scholarship, or research.

- The judge emphasized the transformative nature of the training process, noting that the AI did not replicate or replace the original works but created something fundamentally new.

- Key Quote - The judge wrote: “Like any reader aspiring to be a writer, Anthropic’s LLMs trained upon works… to create something different.”

Case 2: Writers vs Meta – Court Sides with Meta, But Flags Compensation Concerns

- Thirteen authors filed a class action lawsuit against Meta, seeking damages and restitution for the company's alleged use of their copyrighted works to train its LLaMA language models.

- The plaintiffs argued Meta copied large portions of their texts, with the AI generating content derived directly from their work.

- Court’s Decision: No Proven Market Harm

- The judge ruled in Meta’s favour, stating that the plaintiffs had failed to show that LLaMA’s use of their works harmed the market for original biographies or similar content.

- While affirming the transformative potential of AI, the judge noted that companies profiting from the AI boom should find ways to compensate original content creators, even if the current use qualifies as fair use.

Ongoing and Escalating Legal Battles

- Anthropic faces a separate lawsuit from music publishers over copyrighted song lyrics.

- Meta and other tech companies remain entangled in numerous other copyright disputes.

- Twelve copyright lawsuits—including one from The New York Times—have been consolidated into a single case against OpenAI and Microsoft.

- Publishing giant Ziff Davis is also suing OpenAI separately.

- Visual creators have sued platforms like Stability AI, Runway AI, DeviantArt, and Midjourney for unauthorized use of their work. Getty Images is suing Stability AI for allegedly copying over 12 million images.

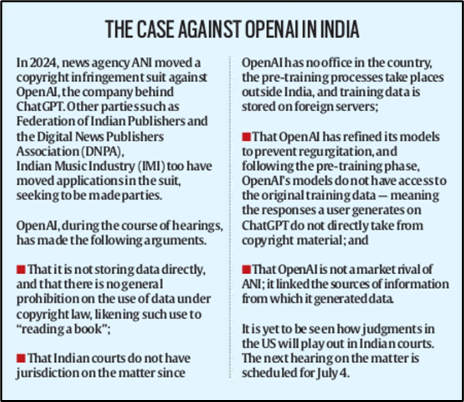

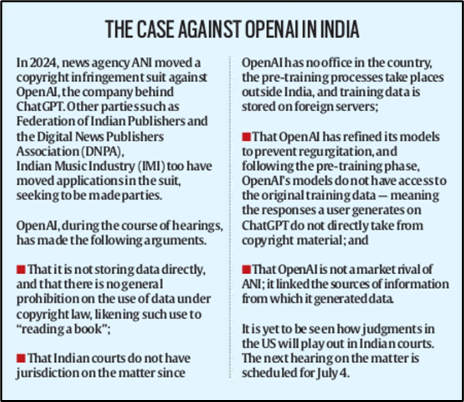

- Indian Media’s Legal Challenge

- In 2024, the news agency ANI filed a case against OpenAI, alleging unauthorized use of its copyrighted content.

- Major Indian media houses like The Indian Express, Hindustan Times, and NDTV have joined the case through the Digital News Publishers Association (DNPA), signalling a rise in domestic litigation.

Significance of the Rulings: A Win, But Not the Final Word

- The court rulings favour Anthropic and Meta by upholding their use of copyrighted material under the “fair use” doctrine.

- However, both companies still face unresolved legal challenges—particularly for sourcing training data from pirated databases like Books3.

- While courts may allow AI’s current training practices, unresolved concerns remain: How will creators be protected? What happens to livelihoods and creativity as AI output grows?

Conclusion

These rulings mark a significant moment in the evolving legal landscape of AI, but they don’t resolve the fundamental copyright and ethical dilemmas surrounding AI-generated content.