Why in News?

- At the end of a two-day meeting in Japan, the digital ministers of the G7 advanced nations recently agreed to adopt "risk-based" regulation on artificial intelligence (AI).

- The decision comes as European lawmakers hurry to introduce an AI Act that would enforce rules on emerging tools such as ChatGPT, a chatbot developed by OpenAI that has been described as the fastest-growing app in history since its launch.

What’s in Today’s Article?

- What is the Group of Seven (G7)?

- Artificial Intelligence (AI)

- Need to regulate AI

- News Summary Regarding AI Regulation

What is the Group of Seven (G7)?

- It is an informal intergovernmental political forum of seven wealthy democracies, formed in 1975.

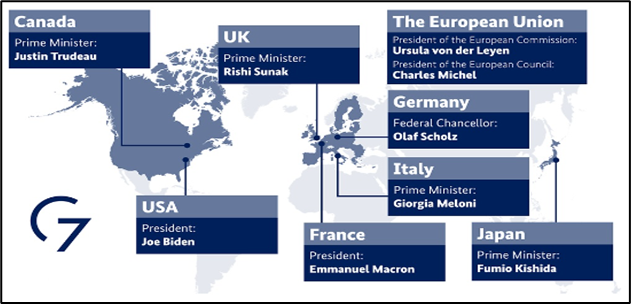

- It consists of Canada, France, Germany, Italy, Japan, the United Kingdom and the United States.

- It is officially organised around the shared values of pluralism and representative government, and its members are the world's largest advanced economies as classified by the International Monetary Fund (IMF).

- The heads of government of the member states, along with representatives of the European Union (a "non-enumerated" member), meet at the annual G7 Summit.

- As of 2020, the G7 accounted for over half of global net wealth (over $200 trillion), 30 to 43% of global GDP and 10% of the world's population.

Artificial Intelligence (AI)

- Artificial intelligence (AI) is the ability of a computer or a robot controlled by a computer to do tasks that are usually done by humans because they require human intelligence and discernment.

- The term is frequently applied to the project of developing systems endowed with the intellectual processes characteristic of humans, such as the ability to reason, discover meaning, generalize, or learn from past experience.

- AI algorithms are trained on large datasets so that they can identify patterns, make predictions and recommend actions much like a human would, but often faster and at a far larger scale.

Need to regulate AI

- Issues with AI

- AI already faces three key issues: privacy, bias and discrimination.

- Regulatory vacuum

- Currently, governments have no policy tools to halt work on AI development. If left unchecked, AI could start infringing on, and ultimately take control of, people's lives.

- Increased use of AI & privacy

- Businesses across industries are increasingly deploying AI to analyse preferences, personalise user experiences, boost productivity and fight fraud.

- For example, Snapchat, Unreal Engine and Shopify have already integrated ChatGPT into their applications.

- This growing use of AI has already transformed the way the global economy works and how businesses interact with their consumers.

- However, in some cases it is also beginning to infringe on people’s privacy.

- Fixing accountability

- AI should be regulated so that the entities using the technology act responsibly and are held accountable.

- Laws and policies that broadly govern algorithms should be developed; these would help promote the responsible use of AI and hold businesses accountable.

News Summary Regarding AI Regulation

- The G7 ministers' agreement marks a landmark in how major countries govern AI amid privacy concerns and security risks.

- According to the ministers, such regulation should preserve an open and enabling environment for the development of AI technologies and be based on democratic values.

- However, the policy instruments to achieve the common vision and goal of trustworthy AI may vary across G7 members.

- The ministers plan to convene future G7 discussions on generative AI, which could cover topics such as governance and the safeguarding of intellectual property rights, including copyright.

- Japan, the chair of the 49th annual G7 summit, has taken an accommodative approach towards AI developers, pledging support for the public and industrial adoption of AI.

- Japan hoped the G7 would agree on agile or flexible governance rather than preemptive, catch-all regulation of AI technology.