Why in news?

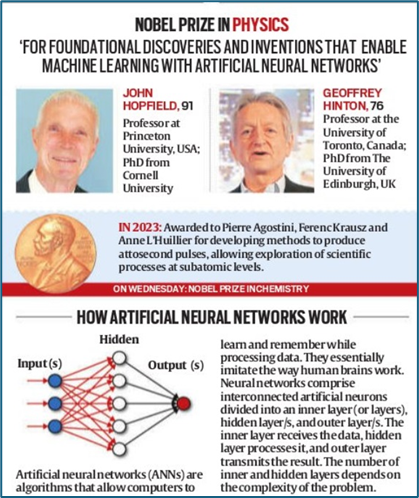

The 2024 Nobel Prize in Physics was awarded to John Hopfield and Geoffrey Hinton "for foundational discoveries and inventions that enable machine learning with artificial neural networks."

Their ground-breaking research in the 1980s laid the foundation for the AI revolution unfolding today.

What’s in today’s article?

- Machine learning

- Deep Learning

- Artificial Neural Network (ANN)

- Works of Nobel Prize winners

Machine learning (ML)

- About

- ML is a subset of artificial intelligence (AI) that enables computers to learn from and make decisions based on data without being explicitly programmed for each task.

- In machine learning, algorithms identify patterns in large datasets and use these patterns to make predictions or perform specific tasks.

- The key idea is that systems improve their performance with experience, that is, by training on more data.

- Applications of Machine Learning:

- Image and speech recognition

- Recommendation systems (like those used by streaming services)

- Fraud detection

- Healthcare diagnostics

- Autonomous vehicles
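The idea of learning from data rather than from explicitly programmed rules can be illustrated with a minimal Python sketch. It assumes the simplest possible model, a straight line y = w*x + b, and fits it to a handful of toy points by gradient descent; the data, learning rate and number of passes are illustrative choices, not taken from any particular system.

```python
def train_linear(xs, ys, lr=0.05, epochs=2000):
    """Fit y = w*x + b to data by gradient descent on mean squared error."""
    w, b = 0.0, 0.0  # the model starts knowing nothing about the rule
    n = len(xs)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # Step against the gradient so the error shrinks a little each pass
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Toy data generated from a hidden rule (y = 2x + 1) that is never given to the learner
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2 * x + 1 for x in xs]

w, b = train_linear(xs, ys)
print(f"learned w={w:.3f}, b={b:.3f}")
print(f"prediction for unseen x=10: {w * 10 + b:.2f}")
```

The learned values should come out very close to w = 2 and b = 1, so the model can make predictions for inputs it was never shown, even though the rule itself was never written into the code.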

Deep Learning (DL)

- About

- Deep Learning is a specialized subset of machine learning that focuses on using artificial neural networks with multiple layers (hence "deep").

- It is loosely inspired by the structure and function of the human brain and learns to recognize complex patterns in large datasets, such as images, text, or sound.

- Deep learning has been pivotal in advancing AI technologies, particularly in areas like image recognition, natural language processing, and self-driving cars.

- Key Applications of Deep Learning:

- Image and speech recognition (e.g., face detection, virtual assistants)

- Autonomous vehicles (e.g., self-driving cars)

- Natural language processing (e.g., language translation)

- Medical diagnostics (e.g., cancer detection in medical imaging)

- ML vs. DL

- While machine learning involves training algorithms with structured data and often requires human input for feature extraction, deep learning automates feature discovery using multi-layered neural networks, making it more powerful for complex tasks, especially when large datasets are available.
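To make "multiple layers" concrete, here is a minimal forward pass through a small network with two hidden layers, written in plain Python. The weights are hypothetical hand-picked numbers (a real deep learning model would learn them from data), and the ReLU and sigmoid activations are common choices assumed here purely for illustration.

```python
import math


def relu(z):
    return max(0.0, z)


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def dense(inputs, weights, biases, activation):
    """One layer: each node takes a weighted sum of all inputs plus a bias, then applies an activation."""
    return [activation(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]


def forward(x, layers):
    """Pass the input through each layer in turn; one layer's output is the next layer's input."""
    for weights, biases, activation in layers:
        x = dense(x, weights, biases, activation)
    return x


# Hypothetical hand-picked weights: 3 inputs -> 2 hidden -> 2 hidden -> 1 output
layers = [
    ([[0.5, -0.2, 0.1], [0.3, 0.8, -0.5]], [0.0, 0.1], relu),  # hidden layer 1
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0], relu),            # hidden layer 2
    ([[0.7, -0.3]], [0.2], sigmoid),                          # output layer
]

y = forward([1.0, 2.0, 3.0], layers)
print(y)  # a single value between 0 and 1
```

Stacking more `dense` layers is what makes a network "deep"; in practice the intermediate layers are where learned features emerge, instead of being hand-designed.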

Artificial Neural Network (ANN)

- About

- An ANN is a mathematical model that uses a network of interconnected nodes, loosely modelled on the brain's neurons, to process data.

- ANNs are a core technique in machine learning (ML) and form the basis of deep learning; they learn from their errors and improve with training.

- They are used in artificial intelligence (AI) to solve complex problems, such as recognizing faces or summarizing documents.

- Key features of ANNs

- Structure

- ANNs are made up of layers of nodes (artificial neurons). Each node computes a weighted sum of its inputs and passes the result through an activation function. Nodes in one layer are typically connected to many nodes in the previous and next layers.

- Learning

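- ANNs are adaptive: they are typically trained with the backpropagation algorithm, which measures the error in the output and works backwards through the network to adjust its weights.

- As they learn, the connection weights are adjusted so that inputs that help produce correct answers carry more weight.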

- Output

- The final layer of nodes produces the ANN's output, which is usually a numerical prediction (for example, a probability or a value) about the input it received.

- Applications of Artificial Neural Networks:

- Image and video recognition (e.g., facial recognition systems)

- Speech recognition (e.g., virtual assistants like Siri and Alexa)

- Natural language processing (e.g., language translation)

- Medical diagnostics (e.g., detecting diseases from medical images)

- Autonomous vehicles (e.g., self-driving car navigation)

- In essence, artificial neural networks mimic the brain’s ability to learn from experience, adapt, and recognize complex patterns, making them foundational to modern AI and machine learning systems.
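The idea of learning from mistakes can be sketched with the classic perceptron update rule for a single artificial neuron. Note that this is a simpler rule swapped in for illustration, not backpropagation: weights change only when the prediction is wrong, and each weight moves in proportion to the input that contributed to the error. The AND-function data, learning rate and epoch limit are assumptions for the example.

```python
def step(z):
    """Threshold activation: fire (1) if the weighted input is positive."""
    return 1 if z > 0 else 0


def predict(w, b, x):
    return step(w[0] * x[0] + w[1] * x[1] + b)


def train_perceptron(samples, lr=1.0, max_epochs=20):
    w, b = [0.0, 0.0], 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for x, target in samples:
            error = target - predict(w, b, x)  # 0 if correct, +1 or -1 if wrong
            if error != 0:
                mistakes += 1
                # Move each weight in proportion to the input that contributed
                w = [wi + lr * error * xi for wi, xi in zip(w, x)]
                b += lr * error
        if mistakes == 0:  # a full pass with no mistakes: training is done
            break
    return w, b


# Toy data: the logical AND function
AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
w, b = train_perceptron(AND)
preds = [predict(w, b, x) for x, _ in AND]
print(preds)  # [0, 0, 0, 1]
```

On the AND data the rule settles after a few passes and then stops, because no mistakes remain. A single neuron like this cannot learn every pattern, which is one reason multi-layer networks trained with backpropagation became important.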

Works of Nobel Prize winners

- Hopfield's contribution - Mimicking the Brain with Neural Networks

- Hopfield's major breakthrough, published in 1982, was the Hopfield network: an artificial neural network that mimics the brain's associative memory by storing patterns and recalling them.

- Unlike conventional computer memory, which stores each item at a specific address, a Hopfield network stores information in the connection strengths across the entire network.

- It captures patterns such as an image or a song holistically, and can recall or regenerate them even from incomplete or distorted inputs.

- This breakthrough advanced pattern recognition in computers, paving the way for technologies like facial recognition and image enhancement.

- His research was inspired by earlier discoveries in neuroscience, notably Donald Hebb's 1949 theory that learning strengthens the connections (synapses) between neurons that are active together.
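A minimal Hopfield-network sketch shows the associative-memory idea. It assumes the textbook formulation: units take values +1 or -1, patterns are stored with a Hebbian rule (connections between units that agree are strengthened), and recall repeatedly sets each unit to the sign of its weighted input. The two 8-unit patterns are made-up toy data. The `energy` function is the physics-inspired quantity that these one-at-a-time updates never increase, so recall slides "downhill" towards a stored pattern.

```python
def train_hopfield(patterns):
    """Store +1/-1 patterns with a Hebbian rule; no self-connections."""
    n = len(patterns[0])
    W = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    # Units that agree in a stored pattern get a stronger link
                    W[i][j] += p[i] * p[j] / n
    return W


def energy(W, s):
    """Hopfield energy: lower values mean the state fits the stored memories better."""
    n = len(s)
    return -0.5 * sum(W[i][j] * s[i] * s[j] for i in range(n) for j in range(n))


def recall(W, state, sweeps=5):
    """Asynchronous updates: set each unit to the sign of its weighted input."""
    s = list(state)
    n = len(s)
    for _ in range(sweeps):
        for i in range(n):
            h = sum(W[i][j] * s[j] for j in range(n))
            s[i] = 1 if h >= 0 else -1
    return s


p1 = [1, 1, 1, 1, -1, -1, -1, -1]
p2 = [1, -1, 1, -1, 1, -1, 1, -1]
W = train_hopfield([p1, p2])

noisy = [1, 1, 1, 1, -1, 1, -1, -1]  # p1 with one unit flipped (index 5)
restored = recall(W, noisy)
print(restored == p1)
print(energy(W, noisy), energy(W, restored))
```

With these toy patterns the corrupted input is restored to `p1`, and its energy falls from -1.5 to -3.0. The memory is spread across every weight in `W`, not kept at one location.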

- Hinton’s Contribution - Deep Learning and Advanced Neural Networks

- Hinton built on Hopfield's work, first with the Boltzmann machine (developed with Terrence Sejnowski in the 1980s) and later with deep neural networks capable of complex tasks like voice and image recognition.

- Backpropagation, which Hinton popularized in a 1986 paper with David Rumelhart and Ronald Williams, enabled these networks to learn and improve through training on large datasets.

- Backpropagation, short for "backward propagation of errors," is an algorithm for supervised learning of artificial neural networks using gradient descent.

- His contributions led to major advancements in AI technologies, including modern applications such as speech recognition, self-driving cars, and virtual assistants.

- At the 2012 ImageNet Large Scale Visual Recognition Challenge, a deep neural network from Hinton's team (AlexNet) achieved a far lower error rate than competing methods, marking a turning point for image recognition.

- His work demonstrated the broad potential of AI in many fields, including astronomy, where machine learning helps researchers analyze vast amounts of data.
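Backpropagation with gradient descent can be sketched in plain Python on a tiny network (2 inputs, 2 hidden sigmoid units, 1 sigmoid output). This is an illustrative sketch, not Hinton's original code: the network size, the OR-function training data, the squared-error loss, the learning rate and the hand-picked starting weights are all assumptions, and weights are updated after every example (stochastic gradient descent).

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(params, x):
    W1, b1, W2, b2 = params
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(W1, b1)]
    y = sigmoid(sum(w * hi for w, hi in zip(W2, h)) + b2)
    return h, y


def loss(params, data):
    """Total squared error 0.5 * (y - t)^2 over the data set."""
    return sum(0.5 * (forward(params, x)[1] - t) ** 2 for x, t in data)


def backprop(params, x, t):
    """Gradients for one example, computed backwards from the output error."""
    W1, b1, W2, b2 = params
    h, y = forward(params, x)
    d_out = (y - t) * y * (1 - y)  # error signal at the output node
    gW2 = [d_out * hi for hi in h]
    gb2 = d_out
    # Propagate the error back through W2 to each hidden node
    d_hid = [d_out * W2[j] * h[j] * (1 - h[j]) for j in range(len(h))]
    gW1 = [[d_hid[j] * xi for xi in x] for j in range(len(h))]
    gb1 = d_hid
    return gW1, gb1, gW2, gb2


def train(params, data, lr=0.5, epochs=2000):
    W1, b1, W2, b2 = params
    for _ in range(epochs):
        for x, t in data:
            gW1, gb1, gW2, gb2 = backprop((W1, b1, W2, b2), x, t)
            # Gradient descent: move every weight against its gradient
            W1 = [[w - lr * g for w, g in zip(row, grow)] for row, grow in zip(W1, gW1)]
            b1 = [b - lr * g for b, g in zip(b1, gb1)]
            W2 = [w - lr * g for w, g in zip(W2, gW2)]
            b2 = b2 - lr * gb2
    return W1, b1, W2, b2


data = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]  # logical OR
params = ([[0.2, -0.4], [0.7, 0.1]], [0.0, 0.0], [0.5, -0.3], 0.0)
before = loss(params, data)
trained = train(params, data)
after = loss(trained, data)
print(f"loss before: {before:.4f}, after: {after:.4f}")
```

The printed loss should fall after training. A common sanity check for a hand-written backward pass is to compare one of its gradients with a finite-difference estimate of the same derivative.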

- Conclusion

- Both Hopfield and Hinton have made pioneering contributions to the development of AI, with Hopfield bridging neuroscience, physics, and biology, and Hinton revolutionizing computer science.

- Their work has shaped modern AI technologies, making them deserving recipients of the Nobel Prize in Physics.